Intriguing!

I enjoyed your video and believe that your work is important. At the same time, I was missing the part where you tell us why we should care about your research. I did find some of that in your poster, with Asperger's being the best candidate for research motivation. Could you please say a bit more about how such a tool might help improve people's lives (as opposed to improving their gaming experiences)?

Thank you.

-

-

Thanks for the comment! There is a huge list of applications for this type of work beyond poker.

- Security/lie detection. Think of Fox's TV show Lie to Me (Tim Roth plays a character based on the real-life Paul Ekman, who is a very influential figure in my field), except that we want to do this automatically with a computer. The deception detector in Ocean's 13 is another example of what I'm working on: in that movie, a facial expression recognition system was used to detect cheating.

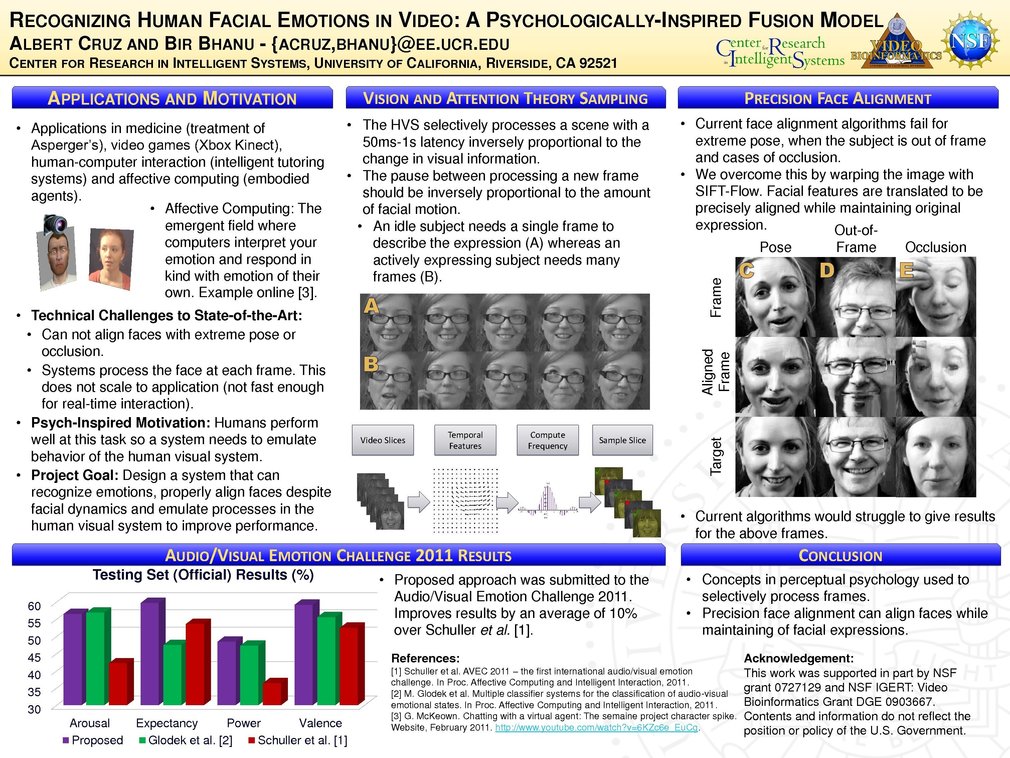

- Non-verbal communication with computers (human-computer interaction, intelligent tutoring systems). When two humans communicate, they go beyond speech; non-verbal communication plays a very important part. Current communication with computers is largely limited to the keyboard and mouse. Imagine dismissing e-mail spam with a wave of your hand, or controlling a computer remotely with facial expressions and gestures. In a field called Affective Computing, computers (embodied agents) also project expressions back to you, to further facilitate non-verbal communication.

- Medical applications. We already mentioned Asperger's. The Transporters is an example of projecting expressions to help autistic children understand emotions. We would want a robotic system to do this automatically (it would check a user's facial expressions and project expressions as well). Another example is detecting pain in infants. This is difficult because, at this stage, infants cannot give reliable verbal feedback. An ideal system would analyze an infant's facial expressions to determine whether or not the infant is in pain.

-

Great video and research. How expensive would this technology be if applied to medicine and other sciences?

-

Thanks for the comment! The example I gave in the video uses a computer screen to display an embodied agent. Examples of this are GRETA and the Sensitive Artificial Listener. That type of system would cost roughly the price of the computer you intend to run it on (plus, perhaps, a projector).

Peter Robinson’s group is using a robotic head to project expressions here. I can’t say how much that type of system would cost.

-

Great presentation

-

Thanks!

-

Great job on the video, you incorporated a lot of information in only three minutes in a very organized way.
