Very nice poster and video! In the introduction to your poster you ask, “Do we interact with others based on how similar or different their movements are from our own?” Have you collected any data to address this question? If not, do you have any hypotheses based on the results of this first study?

- Gwendolyn Johnson

- http://www.igert.org/profiles/4684

- Graduate Student

- Presenter’s IGERT

- Rutgers University

- Nick Ross

- http://www.igert.org/profiles/1498

- Graduate Student

- Presenter’s IGERT

- Rutgers University

- Elio Santos

- http://www.igert.org/profiles/1210

- Graduate Student

- Presenter’s IGERT

- Rutgers University

- Polina Yanovich

- http://www.igert.org/profiles/4181

- Project Associate

- Presenter’s IGERT

- Rutgers University

-

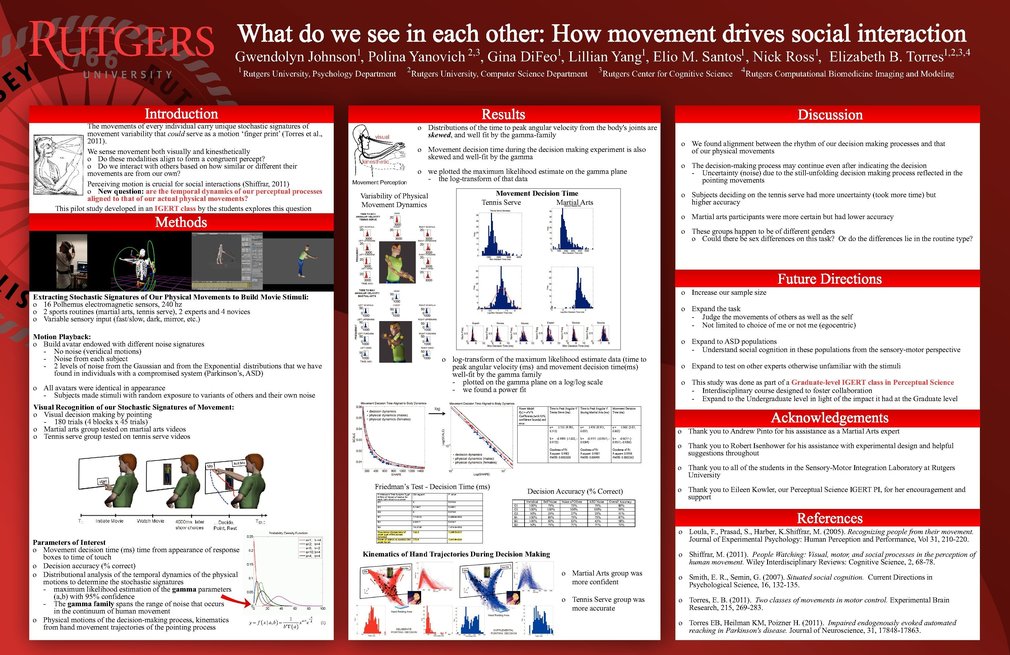

Not yet; this is our first step towards doing that. We hypothesize that if individuals cannot identify their own motions and discriminate them from noisy variants, they will likely not be able to properly discriminate between the motions of others either. The next phase is to modify the experiment so that participants identify the motion (if they do not think it belongs to them) and discriminate between the motions of familiar colleagues. We also plan to test this hypothesis in individuals with autism spectrum disorders, because we have found that their stochastic signatures of physical movement variability are extremely far apart from ours, suggesting that their physical movement variability does not map at all onto ours. More interesting still, their kinesthetic sensing of their own motions is also extremely different from ours. Even if the map constructed by kinesthetically sensing their own physical movements aligns with how they visually sense their motions, that map has nothing to do with ours. Thus we expect that any such map for them is not going to align with ours. We will soon find out.

-

Certainly one of the most enjoyable presentations to watch. I am a Blender 3D CG hobbyist, so it was interesting to see the software in use for your avatars.

Do you think the garments needed (gloves, wires, headgear) had any influence on the motion of the actors? Have you ever considered using Microsoft’s Kinect SDK to get mocap data?

PS: I just posted this to Blender Artists… you will probably win now.

-

It is a very nice idea, but we have high-resolution sensors (240 Hz) that allow us to study very complicated physical motions at very high speeds, something we could not do with the Kinect. However, we do want to use the Kinect to study the movements of babies in their cribs in a non-invasive way.

It is possible that the wires and gear influence the actors' performance, but we make every effort to ensure that they have a free range of motion. Also, the body adapts quite rapidly (for example, we don't often worry about our clothes interfering with movement), so we doubt that the gear substantially affects the performance of our participants. However, we can compare the earlier and later trials of the experiments to find out whether any significant differences emerge during adaptation.

Thank you for sharing our work with Blender Artists! I hope everyone who has visited from that community has enjoyed it.

-

I was watching this a few days ago and thought I should share it. It is supposed to be better than the Kinect in terms of resolution: http://www.youtube.com/watch?v=_d6KuiuteIA

-

It is indeed impressive, but it only has about 1 square foot of sensor space. In other words, your hand needs to be within about 18 inches of the box, so it wouldn't work for full-body capture.

-

While it is true that we would not be able to use this technology for full-body motion recording, we also perform some experiments that involve only arm movement (e.g., reaching for an object). For that sort of task, I think this technology may be quite promising. Granted, the scope of applications for us is fairly limited, but it can still be useful. Thank you for posting this, Elio!

-

Great work, fellows!

-

Thank you! :)

-

Great work. What do you see as extensions of this work?

-

Thank you for visiting our poster, Claudia! To answer your question, we intend to expand beyond the egocentric scope of the task presented by asking participants to differentiate between the motions of other people, such as people they interact with and can recognize, strangers, etc. We plan to diversify the motions beyond the two sports routines used in this study, and also to have males and females perform the opposite routine (i.e., martial arts for the females who had performed a tennis serve) and repeat the task to see whether the gender differences we found in this experiment persist. We also plan to extend the task to the autistic population to see how individuals with social impairments perform relative to unimpaired participants. This is just the beginning; we have opened up many avenues to explore.

-

Great job! The project is clearly defined, and it has the potential to make great contributions to this field.

-

Why thank you, esteemed colleague!

-
