Awesome work! Why did you choose physical reality when it can be so constraining? Have you considered using a virtual world? Is there any sound-based signal that could be combined with your visual signal? What is the time scale of the signal that you are using now? How does that map onto the time scale of actual physical movements that the person may have once performed or perceived?

Best of luck in the competition -Liz Torres

-

-

Thanks for your questions Professor Torres!

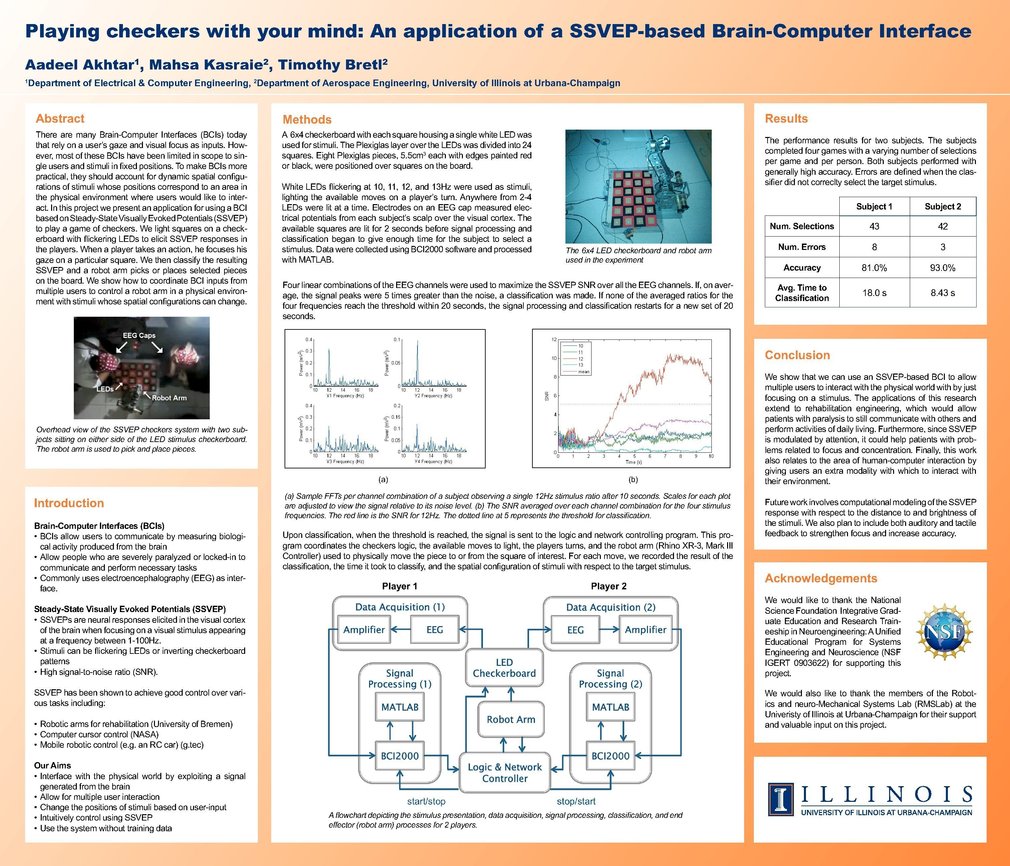

1) With projectors or computer screens, the display's refresh rate adds a constraint: a flicker frequency can only be rendered exactly if each cycle spans a whole number of frames, which limits the number of possible stimuli. In addition, it is easier to change the brightness of individual LEDs by adjusting the voltage across each one, and we plan to study the effects of brightness on classification accuracy. That being said, we do plan to implement SSVEP in a CUBE or CAVE virtual reality environment in the future.

2) One of our lab members is currently exploring the use of audio and tactile feedback to enhance concentration on the LED stimulus.

3) As for time scale, we take an FFT every 1/32 of a second, though it may take at least a couple of seconds for the neurons to lock to the stimulus frequency. As a result, SSVEP is slower than the time scale of actual physical movements, but faster than most other brain-computer interface paradigms, such as P300 or motor imagery.
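To illustrate the refresh-rate constraint from point 1: if each flicker cycle must span a whole number of frames (at least one "on" frame and one "off" frame), a display refreshing at R Hz can only render the frequencies R/n exactly. The sketch below assumes a simple on/off square wave; the function name `achievable_frequencies` is hypothetical and not part of our setup.

```python
def achievable_frequencies(refresh_hz: float, max_frames: int = 12) -> list[float]:
    """Square-wave flicker frequencies a frame-locked display can render exactly.

    Assumes each flicker cycle spans a whole number of frames n (n >= 2, so at
    least one "on" frame and one "off" frame), giving f = refresh_hz / n.
    """
    return [refresh_hz / n for n in range(2, max_frames + 1)]


if __name__ == "__main__":
    # Assumed 60 Hz monitor, cycles of 2 to 8 frames
    for f in achievable_frequencies(60.0, max_frames=8):
        print(f"{f:.2f} Hz")
```

On a 60 Hz screen this gives 30, 20, 15, 12, 10, about 8.57, and 7.5 Hz for n = 2 to 8, so closely spaced targets are scarce. An LED driven directly by a microcontroller is not tied to a frame clock in this way.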
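For point 3, here is a minimal sketch of a sliding-window FFT classifier that makes one decision every 1/32 s. It is not our exact pipeline. The 256 Hz sampling rate, 2 s analysis window, Hann taper, candidate frequencies, and the function name `classify_windows` are all assumptions for illustration. The sketch shows why the update rate and the lock-in time differ. The hop sets how often a decision is made, while the window length sets the frequency resolution (1 / 2 s = 0.5 Hz here) and how much signal each decision draws on.

```python
import numpy as np

FS = 256                               # assumed EEG sampling rate (Hz); hypothetical
HOP_S = 1 / 32                         # one FFT every 1/32 s, as described above
WIN_S = 2.0                            # assumed window length (s); resolution = 1 / WIN_S
CANDIDATES = [8.0, 10.0, 12.0, 15.0]   # hypothetical LED flicker frequencies (Hz)


def classify_windows(eeg: np.ndarray, fs: int = FS) -> list[float]:
    """Return, for each sliding window, the candidate frequency with the most power."""
    win = int(WIN_S * fs)                  # samples per analysis window
    hop = int(round(HOP_S * fs))           # samples between successive FFTs
    freqs = np.fft.rfftfreq(win, d=1 / fs)
    taper = np.hanning(win)                # reduces spectral leakage between bins
    # FFT bin closest to each candidate frequency
    bins = [int(np.argmin(np.abs(freqs - f))) for f in CANDIDATES]
    decisions = []
    for start in range(0, len(eeg) - win + 1, hop):
        seg = eeg[start:start + win] * taper
        power = np.abs(np.fft.rfft(seg)) ** 2
        decisions.append(CANDIDATES[int(np.argmax(power[bins]))])
    return decisions


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(0, 4.0, 1 / FS)
    # Synthetic "EEG": a 12 Hz steady-state response buried in Gaussian noise
    eeg = 0.5 * np.sin(2 * np.pi * 12.0 * t) + rng.normal(0, 1, t.size)
    out = classify_windows(eeg)
    print(len(out), out[:5])
```

A shorter window responds faster when the user shifts attention to a different LED, but it coarsens the frequency resolution and lowers the signal-to-noise ratio. That tradeoff is consistent with needing a couple of seconds of data before the detected frequency settles.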
